As artificial intelligence (AI) technology continues to advance, large language models (LLMs) are increasingly used for natural language processing tasks such as text generation and conversation. However, these models know only what was in their training data and have no access to external or up-to-date knowledge, which can result in inaccurate or irrelevant responses.

This is where retrieval-augmented generation (RAG) comes in. RAG is a technique that supplies an LLM with relevant external data when it generates a response, making its answers more accurate and relevant. In this article, we will delve deeper into the concept of RAG, how it works, and how businesses can put it to effective use.

Introduction to RAG

Definition of RAG

Retrieval-Augmented Generation (RAG) is a technique that enhances Large Language Models (LLMs) by incorporating external knowledge into their response generation. LLMs are AI systems trained on massive datasets, enabling them to produce human-like text and perform natural language processing tasks. RAG takes this a step further: it retrieves relevant external data at query time and passes it to the LLM alongside the user’s question. The model itself is not retrained, but the knowledge it can draw on becomes more comprehensive, which improves the accuracy and relevance of its responses.

Importance of RAG in SEO

Search engine optimization (SEO) has become an integral part of business success, and RAG can play a role in a digital marketing strategy. Search engines have increasingly used language models in recent years, with Google’s use of BERT to interpret queries being a prime example. However, such models can struggle when the information they need lies outside their training data. RAG addresses this issue by incorporating external data, making it a valuable tool for systems that need to return accurate, relevant results.

RAG vs Traditional LLMs

Traditional LLMs rely solely on the data they were initially trained on, which limits their knowledge to what existed at training time. RAG, on the other hand, expands the knowledge available to the LLM by retrieving external data for each query, allowing for better-informed response generation. This makes RAG a useful tool for businesses looking to improve the performance of their AI-powered systems.

Understanding RAG

What is RAG?

As mentioned earlier, RAG is a technique that incorporates external data into LLMs to enhance their response generation. But how exactly does it work? Let’s dive deeper into the mechanics of RAG.

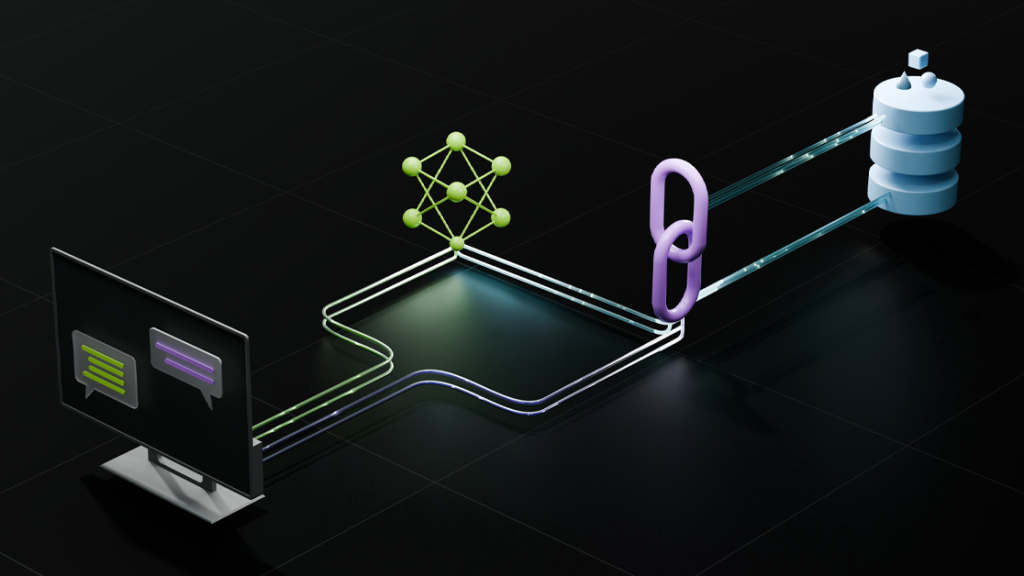

How does RAG Work?

External Data Preparation

The first step in implementing RAG is to collect relevant external data. This data can come from sources such as APIs, databases, or text documents, and could include customer reviews, product descriptions, or even employee data. An embedding model then converts the data into numerical representations (vectors), which are stored, typically in a vector database, to create a knowledge repository that AI-powered models can search.
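
The preparation step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production pipeline: `embed` is a toy stand-in for a real embedding model (it only uses normalized word counts), and the function names, document IDs, and texts are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> dict[str, float]:
    """Toy stand-in for an embedding model: L2-normalized word counts.

    A real system would call a trained embedding model here instead.
    """
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {word: c / norm for word, c in counts.items()} if norm else {}


def build_repository(documents: dict[str, str]) -> dict[str, dict[str, float]]:
    """Convert every document into a vector and store it under its ID."""
    return {doc_id: embed(text) for doc_id, text in documents.items()}


# Hypothetical external data collected from different sources.
documents = {
    "leave-policy": "Employees accrue annual leave every month.",
    "product-faq": "The product ships with a two year warranty.",
}
repository = build_repository(documents)
print(sorted(repository))
```

In practice the dictionary would usually be replaced by a dedicated vector store, but the shape of the step is the same: text goes in, and vectors keyed by document come out.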

Retrieval of Relevant Information

When a user submits a query, it is transformed into a vector representation and matched against the knowledge repository. For instance, a chatbot answering an employee’s question about leave would retrieve documents related to leave policies and that employee’s leave history. Relevance is scored by comparing the query vector with the stored document vectors, for example using cosine similarity, and the highest-scoring documents are returned as context for the query.
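
As a rough sketch of this matching step, the snippet below ranks stored documents by cosine similarity to a query vector and keeps the top results. The three-dimensional vectors and document IDs are made up for illustration; a real embedding model would produce vectors with hundreds or thousands of dimensions, and cosine similarity is only one of several possible scoring functions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query_vector: list[float],
             repository: dict[str, list[float]],
             top_k: int = 2) -> list[tuple[str, float]]:
    """Return the top_k (doc_id, score) pairs, best match first."""
    scored = [
        (doc_id, cosine_similarity(query_vector, vector))
        for doc_id, vector in repository.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical pre-computed document vectors.
repository = {
    "leave-policy": [0.9, 0.1, 0.0],
    "leave-history": [0.7, 0.3, 0.1],
    "product-faq": [0.0, 0.2, 0.9],
}
query_vector = [0.8, 0.2, 0.0]  # hypothetical embedding of a leave question
results = retrieve(query_vector, repository, top_k=2)
print([doc_id for doc_id, _ in results])
```

With these example vectors, the two leave-related documents score far higher than the product FAQ, so only they would be passed on to the LLM.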

LLM Prompt Enhancement

Finally, RAG incorporates the retrieved information into the prompt that the LLM uses to generate its response. Prompt engineering techniques are used here, for example clearly separating the retrieved context from the user’s question and instructing the model to rely on that context. The LLM can then use the additional external knowledge to generate more accurate and relevant responses.
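
One possible way to assemble such a prompt is shown below. The template wording, the `build_prompt` name, and the character budget are illustrative assumptions rather than a standard; the resulting string would then be sent to whichever LLM the system uses.

```python
def build_prompt(question: str, passages: list[str], max_chars: int = 2000) -> str:
    """Combine retrieved passages and the user's question into one prompt.

    Passages are numbered so the model can refer to them, and are added
    in order until the (assumed) character budget would be exceeded.
    """
    context_lines = []
    used = 0
    for number, passage in enumerate(passages, start=1):
        line = f"[{number}] {passage.strip()}"
        if used + len(line) > max_chars:
            break
        context_lines.append(line)
        used += len(line)
    context = "\n".join(context_lines)
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical passages returned by the retrieval step.
prompt = build_prompt(
    "How many leave days do I have left?",
    ["Policy: employees receive 20 leave days per year.",
     "History: this employee has taken 5 leave days this year."],
)
print(prompt)
```

Keeping the instruction, context, and question in clearly separated sections makes it easier to adjust one part of the prompt without affecting the others.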

Benefits of RAG

Enhanced Accuracy

One of the primary benefits of RAG is its ability to improve the accuracy of AI-powered systems. By incorporating external data, RAG provides the LLM with a more comprehensive understanding of different contexts, resulting in more precise and relevant responses. This is especially crucial in industries like finance or healthcare, where accurate and timely information is essential.

Improved Relevance

RAG also greatly enhances the relevance of generated responses. Without access to the right context, LLMs can produce irrelevant or even incorrect responses. RAG addresses this issue by supplying external data that matches the query, so the response is grounded in the given context. This is particularly beneficial for businesses looking to improve customer satisfaction and engagement through AI-powered systems.

Cost-Effectiveness

Compared with other methods of improving LLM output, such as retraining on more data or fine-tuning the model, RAG is a cost-effective solution. It allows businesses to improve their existing AI-powered systems without the expense of retraining, because new knowledge is added by updating the external data rather than the model. This makes it a valuable tool for businesses of all sizes.

Use Cases of RAG

Chatbots and Virtual Assistants

Chatbots and virtual assistants have become increasingly popular in various industries, providing 24/7 customer support and assistance. However, these AI-powered systems are often limited by their initial training data, resulting in repetitive or irrelevant responses. RAG can greatly improve the performance of chatbots by incorporating external data, making them more accurate and relevant to customer queries.

Knowledge Base Creation

For businesses with large amounts of data, creating and maintaining a knowledge base can be a tedious and time-consuming task. RAG can significantly streamline this process: businesses can continuously update and expand their knowledge base with external data, ensuring its relevance and usefulness for AI-powered systems.
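
A small sketch of what continuous updating might look like: the hypothetical `KnowledgeBase` class below stores a content hash with each document, so a re-import only touches documents that are new or have changed. In a full system, the changed documents are the ones that would be re-embedded; that part is omitted here.

```python
import hashlib


class KnowledgeBase:
    """Hypothetical in-memory store that tracks document changes by hash."""

    def __init__(self) -> None:
        self._entries: dict[str, tuple[str, str]] = {}  # doc_id -> (hash, text)

    def upsert(self, doc_id: str, text: str) -> bool:
        """Insert or update a document; return True if anything changed."""
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        existing = self._entries.get(doc_id)
        if existing is not None and existing[0] == digest:
            return False  # unchanged, nothing to re-embed
        self._entries[doc_id] = (digest, text)
        return True

    def get(self, doc_id: str) -> str:
        return self._entries[doc_id][1]


kb = KnowledgeBase()
print(kb.upsert("leave-policy", "Employees receive 20 leave days."))  # new
print(kb.upsert("leave-policy", "Employees receive 20 leave days."))  # same
print(kb.upsert("leave-policy", "Employees receive 22 leave days."))  # changed
```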

Content Generation and Curation

RAG can also be used to generate and curate content for websites and social media platforms. By incorporating external data, businesses can create more accurate and relevant content that resonates with their target audience. This can greatly improve customer engagement and lead to increased website traffic and conversions.

Question-Answering Systems

Question-answering systems, such as those used in online forums or customer service portals, can benefit greatly from RAG. By incorporating external data into their knowledge base, these systems can provide more accurate and relevant responses to user queries, improving overall customer satisfaction and reducing response times.

Implementing RAG in Business

To effectively implement RAG in business, there are a few key steps that need to be taken:

Identifying Relevant Data Sources

The first step in implementing RAG is identifying relevant external data sources. These could include customer feedback, product descriptions, employee data, or even publicly available information. Businesses must ensure that the data collected is relevant to their industry and context to improve the LLM’s performance.

Choosing the Right Embedding Model

The embedding model used to convert external data into a numerical representation plays a significant role in the success of RAG. Businesses must carefully evaluate and choose the right embedding model, considering factors such as data quality, model performance, and compatibility with their existing AI systems.

Prompt Engineering Techniques

Prompt engineering techniques are crucial for effective communication between the RAG pipeline and the LLM. Businesses must ensure that the prompts used are clear, concise, and relevant to the context. This will help the LLM generate more accurate and relevant responses, improving overall performance.

Evaluating RAG Performance

Finally, it is essential to continuously evaluate and measure the performance of the RAG implementation. This could be done through A/B testing or by collecting feedback from users. By regularly evaluating RAG performance, businesses can identify any issues and make necessary changes for continued improvement.
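
As one illustration of how such a comparison could be quantified, the sketch below computes a hit rate at k: the share of test queries for which at least one known-relevant document appears in the top k retrieved results. The metric choice, the query and document IDs, and the two variants being compared are all assumptions made for the example.

```python
def hit_rate_at_k(retrieved: dict[str, list[str]],
                  relevant: dict[str, set[str]],
                  k: int) -> float:
    """Fraction of queries with at least one relevant document in the top k."""
    if not retrieved:
        return 0.0
    hits = sum(
        1
        for query, doc_ids in retrieved.items()
        if relevant.get(query, set()) & set(doc_ids[:k])
    )
    return hits / len(retrieved)


# Hypothetical labeled test set: which documents answer each query.
relevant = {"q1": {"d2"}, "q2": {"d5"}}

# Hypothetical ranked results from two retrieval setups under A/B test.
variant_a = {"q1": ["d1", "d2"], "q2": ["d3", "d4"]}
variant_b = {"q1": ["d2", "d1"], "q2": ["d5", "d3"]}

for name, results in (("A", variant_a), ("B", variant_b)):
    print(name, hit_rate_at_k(results, relevant, k=2))
```

A retrieval metric like this only covers part of the picture; user feedback on the final generated answers remains necessary to judge the system as a whole.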

Challenges and Limitations of RAG

While RAG has proven to be an effective tool for improving the responses of AI-powered systems, there are some challenges and limitations businesses need to be aware of:

Data Quality and Bias

One of the most significant challenges with RAG is ensuring the quality and reliability of external data sources. If the data collected is biased or inaccurate, it can greatly affect the performance of RAG and result in incorrect or irrelevant responses. Businesses must carefully evaluate and monitor their chosen data sources to avoid these issues.

Integration with Existing Systems

Another challenge businesses may face when implementing RAG is integrating it with their existing AI-powered systems. This could require significant changes to the system architecture and may result in compatibility issues. It is important to carefully plan and test RAG integration to avoid disruptions to existing systems.

Limited Domain Expertise

RAG relies heavily on external data sources, which may not always be readily available. In industries with limited publicly available information or niche markets, it may be challenging to find relevant data for RAG. This could limit the effectiveness of RAG in these contexts.

Conclusion

RAG (Retrieval-Augmented Generation) is a valuable technique for improving AI-powered systems through retrieval. By incorporating external data into the prompts given to LLMs, RAG improves the accuracy and relevance of generated responses, making it a useful tool for businesses looking to improve their digital presence and customer engagement. With proper implementation and careful consideration of the challenges and limitations, businesses can get the most out of RAG and stay ahead in the ever-evolving world of AI technology.